PReLU

- class torch.nn.modules.activation.PReLU(num_parameters=1, init=0.25, device=None, dtype=None)

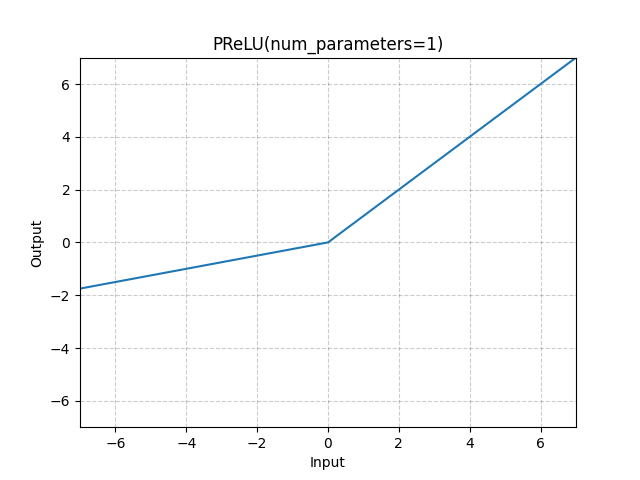

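Applies the element-wise PReLU function:

$$
\text{PReLU}(x) = \max(0, x) + a \cdot \min(0, x)
$$

or

$$
\text{PReLU}(x) =
\begin{cases}
x, & \text{if } x \ge 0 \\
ax, & \text{otherwise}
\end{cases}
$$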

Here $a$ is a learnable parameter. When called without arguments, nn.PReLU() uses a single parameter $a$ across all input channels. If called with nn.PReLU(nChannels), a separate $a$ is used for each input channel.
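As a quick sanity check, the following sketch compares the module output with a manual evaluation of both forms of the definition. The input shape and the variable names are arbitrary choices made for illustration.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Default module: a single shared slope a, initialised to 0.25.
m = nn.PReLU()
x = torch.randn(4, 3)

# Manual evaluation of max(0, x) + a * min(0, x).
a = m.weight  # shape (1,), broadcasts over x
manual = torch.clamp(x, min=0) + a * torch.clamp(x, max=0)

# Piecewise form: x where x >= 0, a * x otherwise.
piecewise = torch.where(x >= 0, x, a * x)

out = m(x)
print(torch.allclose(out, manual), torch.allclose(out, piecewise))
```

Both comparisons should agree, since the two forms describe the same function.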

Note

For good performance, weight decay should not be used when learning $a$.
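One way to follow this advice is to place the PReLU parameters in their own optimizer parameter group with weight decay set to zero. The sketch below assumes a hypothetical toy model, and the learning rate and decay values are placeholders rather than recommendations.

```python
import torch
from torch import nn

# Hypothetical toy model, used only for illustration.
model = nn.Sequential(nn.Linear(8, 16), nn.PReLU(16), nn.Linear(16, 1))

# Collect the PReLU slopes separately from every other parameter.
prelu_params, other_params = [], []
for module in model.modules():
    if isinstance(module, nn.PReLU):
        prelu_params.extend(module.parameters())
    else:
        # recurse=False avoids collecting a child's parameters twice.
        other_params.extend(module.parameters(recurse=False))

# Per-group options override the optimizer defaults.
optimizer = torch.optim.SGD(
    [
        {"params": other_params, "weight_decay": 1e-4},  # placeholder value
        {"params": prelu_params, "weight_decay": 0.0},   # no decay on a
    ],
    lr=0.01,  # placeholder value
)
```

Grouping by module type keeps the rule independent of parameter names, which may differ between models.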

Note

The channel dimension is the 2nd dimension of the input. When the input has fewer than 2 dimensions, there is no channel dimension and the number of channels is 1.
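The channel convention can be illustrated with a small sketch. The shapes and slope values below are arbitrary and chosen only to make the per-channel broadcasting visible.

```python
import torch
from torch import nn

# One slope per channel; the input's 2nd dimension must have size 3.
m = nn.PReLU(num_parameters=3)
x = torch.randn(2, 3, 4, 4)  # (batch, channels, height, width)
out = m(x)

# Give each channel a distinct slope to make the broadcasting visible.
with torch.no_grad():
    m.weight.copy_(torch.tensor([0.1, 0.2, 0.3]))

# Reshape the slopes so they line up with the channel dimension (dim 1).
expected = torch.where(x >= 0, x, m.weight.view(1, 3, 1, 1) * x)
per_channel_ok = torch.allclose(m(x), expected)

# A 1-D input has no channel dimension, so a single shared slope is used.
shared = nn.PReLU()
v = torch.randn(5)
out_1d = shared(v)
```

In both cases the output keeps the shape of the input; only the way the slope is shared across elements differs.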

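- Parameters:

num_parameters (int) – number of $a$ to learn. Although it takes an int as input, only two values are legitimate: 1, or the number of channels of the input. Default: 1

init (float) – the initial value of $a$. Default: 0.25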

- Shape:

Input: $(*)$, where $*$ means any number of additional dimensions.

Output: $(*)$, same shape as the input.

- Variables:

weight (Tensor) – the learnable weights of shape (num_parameters).

Examples:

>>> import torch
>>> from torch import nn
>>> m = nn.PReLU()
>>> input = torch.randn(2)
>>> output = m(input)