MultiplicativeLR

- class torch.optim.lr_scheduler.MultiplicativeLR(optimizer, lr_lambda, last_epoch=-1)

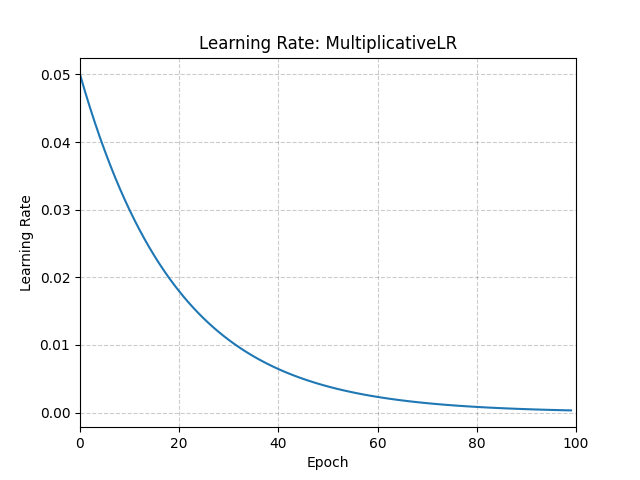

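Multiply the learning rate of each parameter group by the factor returned by the specified function, lr_lambda, each time the scheduler steps.

When last_epoch=-1, the scheduler starts from scratch and records each parameter group's current lr as its initial lr.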
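Because each step scales the current rate rather than the initial one, the factors compound: after n calls to step() the rate is initial_lr * lr_lambda(1) * ... * lr_lambda(n). The minimal sketch below assumes this rule and checks it against the closed form for a constant factor of 0.95. It is plain Python and does not import PyTorch; multiplicative_lrs is a hypothetical helper.

```python
import math


def multiplicative_lrs(initial_lr, lr_lambda, num_steps):
    """Hypothetical helper: learning rates after 0..num_steps scheduler steps.

    Mirrors the documented rule: step k (k >= 1) multiplies the current
    rate by lr_lambda(k); step 0 (construction) leaves it unchanged.
    """
    lrs = [initial_lr]
    lr = initial_lr
    for epoch in range(1, num_steps + 1):
        lr *= lr_lambda(epoch)
        lrs.append(lr)
    return lrs


lrs = multiplicative_lrs(0.1, lambda epoch: 0.95, num_steps=3)
# The factors compound, so the closed form is initial_lr * 0.95 ** n.
for n, lr in enumerate(lrs):
    assert math.isclose(lr, 0.1 * 0.95 ** n)
print([round(lr, 5) for lr in lrs])  # [0.1, 0.095, 0.09025, 0.08574]
```

With a constant factor, MultiplicativeLR therefore behaves like an exponential decay of the starting rate.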

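- Parameters:

optimizer (Optimizer) – Wrapped optimizer.

lr_lambda (function or list) – A function which computes a multiplicative factor given an integer parameter epoch, or a list of such functions, one for each group in optimizer.param_groups.

last_epoch (int) – The index of the last epoch. Default: -1.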
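The sketch below mirrors the lr_lambda rule in plain Python, without PyTorch. A single function is shared by every parameter group, while a list must supply exactly one function per group. normalize_lr_lambdas and step_groups are hypothetical helpers, and raising ValueError on a length mismatch is an assumption modelled on PyTorch's behaviour.

```python
def normalize_lr_lambdas(lr_lambda, num_groups):
    """Hypothetical helper: return one factor function per parameter group."""
    if isinstance(lr_lambda, (list, tuple)):
        if len(lr_lambda) != num_groups:
            raise ValueError(
                f"Expected {num_groups} lr_lambdas, but got {len(lr_lambda)}"
            )
        return list(lr_lambda)
    # A single callable is shared by every group.
    return [lr_lambda] * num_groups


def step_groups(group_lrs, lr_lambdas, epoch):
    """Multiply each group's current lr by its own factor for this epoch."""
    return [lr * fn(epoch) for lr, fn in zip(group_lrs, lr_lambdas)]


# Two hypothetical parameter groups, e.g. a backbone and a head.
lambdas = normalize_lr_lambdas([lambda e: 0.5, lambda e: 0.9], num_groups=2)
lrs = [0.1, 1.0]
for epoch in (1, 2):
    lrs = step_groups(lrs, lambdas, epoch)
# lrs is now approximately [0.025, 0.81]
```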

Example

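    >>> import torch
    >>> from torch.optim.lr_scheduler import MultiplicativeLR
    >>> model = torch.nn.Linear(10, 1)  # any model works here
    >>> optimizer = torch.optim.SGD(model.parameters(), lr=0.1)
    >>> lmbda = lambda epoch: 0.95
    >>> scheduler = MultiplicativeLR(optimizer, lr_lambda=lmbda)
    >>> for epoch in range(100):
    ...     train(...)
    ...     validate(...)
    ...     scheduler.step()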
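Since lr_lambda receives the epoch counter, a factor that is 1.0 on most epochs produces a piecewise-constant schedule. The plain-Python simulation below follows the same loop shape as the example above, using a hypothetical lambda that halves the rate every 10 epochs. It does not call PyTorch, and simulate is an illustrative helper only.

```python
def simulate(initial_lr, lr_lambda, num_epochs):
    """Hypothetical simulation of MultiplicativeLR: lr used in each epoch."""
    lr = initial_lr
    used = []
    for epoch in range(1, num_epochs + 1):
        used.append(lr)          # training for this epoch uses the current lr
        lr *= lr_lambda(epoch)   # scheduler.step() then applies the factor
    return used


# Halve the rate whenever the incremented epoch counter is a multiple of 10.
halve_every_10 = lambda epoch: 0.5 if epoch % 10 == 0 else 1.0
used = simulate(0.1, halve_every_10, num_epochs=25)
print(used[0], used[9], used[10], used[20])  # 0.1 0.1 0.05 0.025
```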

- get_last_lr()

Get the most recent learning rates computed by this scheduler.

- Returns:

A list of learning rates with entries for each of the optimizer’s param_groups, with the same types as their group["lr"]s.

- Return type:

list[float | Tensor]

Note

The returned Tensors are copies, and never alias the optimizer’s group["lr"]s.

- get_lr()

Compute the next learning rate for each of the optimizer’s param_groups.

Scales the current group["lr"]s in each of the optimizer’s param_groups by the outputs of the lr_lambdas at last_epoch.

- Returns:

A list of learning rates for each of the optimizer’s param_groups with the same types as their current group["lr"]s.

- Return type:

list[float | Tensor]

Note

If you’re trying to inspect the most recent learning rate, use get_last_lr() instead.

Note

The returned Tensors are copies, and never alias the optimizer’s group["lr"]s.
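The scaling rule can be sketched in plain Python. compute_next_lrs is a hypothetical stand-in, not PyTorch's get_lr(). It assumes, as in PyTorch's implementation, that the factor is applied only when last_epoch > 0, so the initial step performed at construction leaves the rates unchanged.

```python
def compute_next_lrs(current_lrs, lr_lambdas, last_epoch):
    """Hypothetical sketch of the get_lr() rule for plain float rates."""
    if last_epoch > 0:
        # Scale each group's *current* lr by its factor at last_epoch.
        return [lr * fn(last_epoch) for lr, fn in zip(current_lrs, lr_lambdas)]
    # Initial step: keep the rates the optimizer was created with.
    return list(current_lrs)


lambdas = [lambda epoch: 0.95, lambda epoch: 0.5]
print(compute_next_lrs([0.1, 1.0], lambdas, last_epoch=0))  # [0.1, 1.0]
print(compute_next_lrs([0.1, 1.0], lambdas, last_epoch=1))  # approximately [0.095, 0.5]
```

Returning a fresh list in both branches matches the spirit of the note above: callers never receive the scheduler's or optimizer's own containers.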

- load_state_dict(state_dict)

Load the scheduler’s state.

- Parameters:

state_dict (dict) – scheduler state. Should be an object returned from a call to state_dict().
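Because plain functions and lambdas are not serialized (see state_dict()), restoring a scheduler can only update lr_lambda entries that are callable objects. The sketch below assumes the saved state holds one entry per group: either None or a copy of the object's __dict__. restore_lr_lambdas and ScaledDecay are hypothetical names used only for illustration.

```python
class ScaledDecay:
    """Hypothetical callable-object lr_lambda with tunable state."""

    def __init__(self, factor):
        self.factor = factor

    def __call__(self, epoch):
        return self.factor


def restore_lr_lambdas(lr_lambdas, saved):
    """Copy saved attribute dicts back onto callable-object lambdas."""
    for fn, state in zip(lr_lambdas, saved):
        if state is not None:  # None marks a function/lambda that was not saved
            fn.__dict__.update(state)


live = [ScaledDecay(0.9), lambda epoch: 0.5]
saved = [{"factor": 0.8}, None]  # e.g. loaded from a checkpoint
restore_lr_lambdas(live, saved)
print(live[0](5), live[1](5))  # 0.8 0.5
```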

- state_dict()

Return the state of the scheduler as a dict.

It contains an entry for every variable in self.__dict__ which is not the optimizer. The learning rate lambda functions will only be saved (as a copy of their __dict__) if they are callable objects, not if they are plain functions or lambdas.
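The lambda-saving rule can be illustrated with a minimal sketch. It assumes, as in PyTorch's implementation, that a plain function or lambda is recognised with isinstance(fn, types.FunctionType) and stored as None, while any other callable object contributes a copy of its __dict__. lambdas_state and ScaledDecay are hypothetical names.

```python
import types


class ScaledDecay:
    """Hypothetical callable-object lr_lambda whose state can be saved."""

    def __init__(self, factor):
        self.factor = factor

    def __call__(self, epoch):
        return self.factor


def lambdas_state(lr_lambdas):
    """Return the serializable part of each lr_lambda (None if not saved)."""
    state = []
    for fn in lr_lambdas:
        if isinstance(fn, types.FunctionType):
            state.append(None)  # functions and lambdas are skipped
        else:
            state.append(fn.__dict__.copy())  # callable objects keep attributes
    return state


print(lambdas_state([ScaledDecay(0.9), lambda epoch: 0.95]))
# [{'factor': 0.9}, None]
```

Using a small class instead of a lambda is therefore what lets a schedule's parameters survive a checkpoint round trip.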

- step(epoch=None)

Step the scheduler.

- Parameters:

epoch (int, optional) –

Deprecated since version 1.4: If provided, sets last_epoch to epoch and uses _get_closed_form_lr() if it is available. This is not universally supported. Use step() without arguments instead.

Note

Call this method after calling the optimizer’s step().
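The ordering matters because the optimizer step uses whatever rate is currently stored in group["lr"]. The plain-Python simulation below records the rate each optimizer step would see under either ordering. It uses a hypothetical single-group setup with a constant factor of 0.5 and is not PyTorch code. Calling the scheduler first skips the initial rate entirely.

```python
def rates_seen(scheduler_first, epochs=3, initial_lr=0.1, factor=0.5):
    """Record the lr each simulated optimizer.step() would use."""
    group = {"lr": initial_lr}
    seen = []

    def optimizer_step():
        seen.append(group["lr"])  # the update uses the current rate

    def scheduler_step():
        group["lr"] *= factor  # multiplicative update, constant factor

    for _ in range(epochs):
        if scheduler_first:
            scheduler_step()
            optimizer_step()
        else:
            optimizer_step()
            scheduler_step()
    return seen


print(rates_seen(scheduler_first=False))  # [0.1, 0.05, 0.025]
print(rates_seen(scheduler_first=True))   # [0.05, 0.025, 0.0125]
```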