CosineAnnealingWarmRestarts

- class torch.optim.lr_scheduler.CosineAnnealingWarmRestarts(optimizer, T_0, T_mult=1, eta_min=0.0, last_epoch=-1)

Set the learning rate of each parameter group using a cosine annealing schedule.

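Here $\eta_{max}$ is set to the initial lr, $T_{cur}$ is the number of epochs since the last restart, and $T_{i}$ is the number of epochs between two warm restarts in SGDR:

$$\eta_t = \eta_{min} + \frac{1}{2}(\eta_{max} - \eta_{min})\left(1 + \cos\left(\frac{T_{cur}}{T_{i}}\pi\right)\right)$$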

When $T_{cur} = T_{i}$, set $\eta_t = \eta_{min}$. When $T_{cur} = 0$ after restart, set $\eta_t = \eta_{max}$.
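As a quick check of the formula and its two boundary conditions, the sketch below evaluates $\eta_t$ in plain Python. The helper name `sgdr_lr` and its argument names are hypothetical illustrations, not part of `torch.optim`.

```python
import math


def sgdr_lr(eta_max: float, eta_min: float, t_cur: float, t_i: float) -> float:
    """Hypothetical helper: cosine-annealed learning rate inside one SGDR cycle."""
    if t_i <= 0:
        raise ValueError("t_i must be positive")
    # Map the elapsed fraction of the cycle, T_cur / T_i, onto [0, pi].
    angle = math.pi * t_cur / t_i
    return eta_min + 0.5 * (eta_max - eta_min) * (1.0 + math.cos(angle))


# Walk through one 20-epoch cycle: the rate falls from eta_max towards eta_min.
for t_cur in (0, 5, 10, 15, 20):
    print(t_cur, round(sgdr_lr(0.05, 0.001, t_cur, 20), 6))
```

At $T_{cur} = 0$ the cosine term is 1 and the helper returns $\eta_{max}$; at $T_{cur} = T_i$ it is $-1$ and the helper returns $\eta_{min}$, matching the two rules above.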

It has been proposed in SGDR: Stochastic Gradient Descent with Warm Restarts.

- Parameters:

optimizer (Optimizer) – Wrapped optimizer.

T_0 (int) – Number of iterations until the first restart.

T_mult (int, optional) – A factor by which $T_{i}$ increases after a restart. Default: 1.

eta_min (float, optional) – Minimum learning rate. Default: 0.

last_epoch (int, optional) – The index of the last epoch. Default: -1.
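T_0 and T_mult together fix where the restarts fall: the first cycle lasts T_0 epochs and each later cycle is T_mult times longer than the one before. The sketch below lists the restart epochs, assuming the scheduler is stepped once per epoch without an explicit epoch argument; `restart_epochs` is a hypothetical helper, not a PyTorch function.

```python
from typing import List


def restart_epochs(t_0: int, t_mult: int, horizon: int) -> List[int]:
    """Hypothetical helper: epochs (< horizon) at which a warm restart occurs."""
    if t_0 <= 0 or t_mult < 1:
        raise ValueError("expected t_0 > 0 and t_mult >= 1")
    restarts = []
    t_i = t_0        # length of the current cycle
    boundary = t_0   # epoch at which the current cycle ends
    while boundary < horizon:
        restarts.append(boundary)
        t_i *= t_mult    # the next cycle is t_mult times longer
        boundary += t_i
    return restarts


print(restart_epochs(20, 1, 100))  # [20, 40, 60, 80]
print(restart_epochs(10, 2, 200))  # [10, 30, 70, 150]
```

With T_0=20 and the default T_mult=1, a 100-epoch run therefore restarts every 20 epochs; with T_mult=2 the gaps between restarts double each time.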

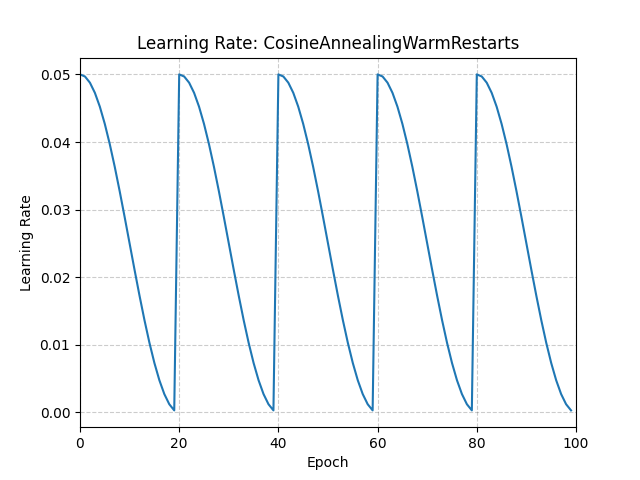

Example

    >>> optimizer = torch.optim.SGD(model.parameters(), lr=0.05)
    >>> scheduler = torch.optim.lr_scheduler.CosineAnnealingWarmRestarts(
    ...     optimizer, T_0=20
    ... )
    >>> for epoch in range(100):
    ...     train(...)
    ...     validate(...)
    ...     scheduler.step()

- get_last_lr()

Get the most recent learning rates computed by this scheduler.

- Returns:

A `list` of learning rates with entries for each of the optimizer’s `param_groups`, with the same types as their `group["lr"]`s.

- Return type:

list[float | Tensor]

Note

The returned `Tensor`s are copies, and never alias the optimizer’s `group["lr"]`s.

- get_lr()

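Compute the next learning rate for each of the optimizer’s `param_groups`.

Computes learning rates for the optimizer’s `param_groups` following:

$$\eta_t = \eta_{min} + \frac{1}{2}(\eta_{max} - \eta_{min})\left(1 + \cos\left(\frac{T_{cur}}{T_{i}}\pi\right)\right)$$

where $\eta_{max}$ is the group’s initial learning rate, `T_cur` is the number of epochs since the last restart, and `T_i` is the number of epochs between two restarts. Both `T_cur` and `T_i` are updated in `step()`, and `T_i` becomes `T_mult` times larger after each restart.

- Returns: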

A `list` of learning rates for each of the optimizer’s `param_groups`, with the same types as their current `group["lr"]`s.

- Return type:

list[float | Tensor]

Note

If you’re trying to inspect the most recent learning rate, use `get_last_lr()` instead.

Note

The returned `Tensor`s are copies, and never alias the optimizer’s `group["lr"]`s.
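The `T_cur` / `T_i` bookkeeping described for `get_lr()` can be traced with a few lines of plain Python. `CycleCounter` is a hypothetical stand-in that models only what happens on a `step()` call without an explicit epoch; it does not reproduce the rest of the scheduler.

```python
class CycleCounter:
    """Hypothetical model of T_cur / T_i updates on an argument-free step()."""

    def __init__(self, t_0: int, t_mult: int = 1) -> None:
        self.t_i = t_0        # T_i: length of the current cycle
        self.t_cur = 0        # T_cur: epochs since the last restart
        self.t_mult = t_mult  # growth factor applied at each restart

    def step(self) -> None:
        self.t_cur += 1
        if self.t_cur >= self.t_i:
            # Warm restart: wrap T_cur back and lengthen the next cycle.
            self.t_cur -= self.t_i
            self.t_i *= self.t_mult


counter = CycleCounter(t_0=2, t_mult=2)
trace = []
for _ in range(6):
    counter.step()
    trace.append((counter.t_cur, counter.t_i))
print(trace)  # [(1, 2), (0, 4), (1, 4), (2, 4), (3, 4), (0, 8)]
```

Each time `T_cur` reaches `T_i`, it wraps to 0 (the restart, where the rate jumps back to $\eta_{max}$) and `T_i` is multiplied by `T_mult`.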

- load_state_dict(state_dict)

Load the scheduler’s state.

- Parameters:

state_dict (dict) – Scheduler state. Should be an object returned from a call to `state_dict()`.

- state_dict()

Return the state of the scheduler as a `dict`.

It contains an entry for every variable in `self.__dict__` which is not the optimizer.
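The `state_dict()` / `load_state_dict()` pair amounts to copying every attribute except the wrapped optimizer out, and writing those attributes back in. The sketch below illustrates that pattern on a hypothetical `TinyScheduler` class; its attribute names are illustrative and it is not PyTorch’s implementation.

```python
from typing import Any, Dict


class TinyScheduler:
    """Hypothetical scheduler used only to illustrate state_dict round-tripping."""

    def __init__(self, optimizer: Any, t_0: int) -> None:
        self.optimizer = optimizer  # excluded from the saved state
        self.T_0 = t_0
        self.T_i = t_0
        self.T_cur = 0
        self.last_epoch = 0

    def state_dict(self) -> Dict[str, Any]:
        # One entry per attribute in __dict__, skipping the optimizer.
        return {k: v for k, v in self.__dict__.items() if k != "optimizer"}

    def load_state_dict(self, state_dict: Dict[str, Any]) -> None:
        # Overwrite this instance's attributes with the saved ones.
        self.__dict__.update(state_dict)


old = TinyScheduler(optimizer=object(), t_0=10)
old.T_cur, old.last_epoch = 7, 7
saved = old.state_dict()

new = TinyScheduler(optimizer=object(), t_0=10)
new.load_state_dict(saved)
print(new.T_cur, new.last_epoch)  # 7 7
```

Because the optimizer is left out, the restored scheduler keeps the optimizer it was constructed with; the optimizer’s own state is saved separately through its own `state_dict()`.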

- step(epoch=None)

`step()` can be called after every batch update.

Example

    >>> scheduler = CosineAnnealingWarmRestarts(optimizer, T_0, T_mult)
    >>> iters = len(dataloader)
    >>> for epoch in range(20):
    ...     for i, sample in enumerate(dataloader):
    ...         inputs, labels = sample['inputs'], sample['labels']
    ...         optimizer.zero_grad()
    ...         outputs = net(inputs)
    ...         loss = criterion(outputs, labels)
    ...         loss.backward()
    ...         optimizer.step()
    ...         scheduler.step(epoch + i / iters)
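Passing a fractional epoch such as `epoch + i / iters` lets the cosine curve advance within an epoch instead of only at epoch boundaries. The sketch below shows how an explicit, possibly fractional, epoch maps to a position `(T_cur, T_i)` in the current cycle. `cycle_position` is a hypothetical helper that walks the cycles with a loop; it follows the schedule described on this page and is not taken from PyTorch’s source.

```python
from typing import Tuple


def cycle_position(epoch: float, t_0: int, t_mult: int = 1) -> Tuple[float, int]:
    """Hypothetical helper: (T_cur, T_i) for an explicit, possibly fractional epoch."""
    if epoch < 0:
        raise ValueError("epoch must be non-negative")
    if t_0 <= 0 or t_mult < 1:
        raise ValueError("expected t_0 > 0 and t_mult >= 1")
    if t_mult == 1:
        # Fixed-length cycles: the position is just the remainder.
        return float(epoch) % t_0, t_0
    t_cur, t_i = float(epoch), t_0
    # Skip every completed cycle; each one is t_mult times longer than the last.
    while t_cur >= t_i:
        t_cur -= t_i
        t_i *= t_mult
    return t_cur, t_i


iters = 4  # hypothetical number of batches per epoch
for epoch in range(2):
    for i in range(iters):
        frac = epoch + i / iters
        print(frac, cycle_position(frac, t_0=1, t_mult=2))
```

With `t_0=1` and `t_mult=2`, the fractional epochs 0.25, 0.5 and 0.75 move `T_cur` smoothly through the first cycle, and epoch 1.0 lands at the start of a two-epoch cycle.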

Calls with and without an explicit epoch argument can be interleaved.

Example

    >>> scheduler = CosineAnnealingWarmRestarts(optimizer, T_0, T_mult)
    >>> for epoch in range(20):
    ...     scheduler.step()
    >>> scheduler.step(26)
    >>> scheduler.step()  # equivalent to scheduler.step(27); without step(26) it would be scheduler.step(21)
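To see why the final argument-free call behaves like `step(27)`, the hypothetical `EpochTracker` below models only the `last_epoch` and `T_cur` updates, assuming `T_mult = 1` and `last_epoch = 0` right after construction to keep it short. An argument-free step continues from `last_epoch + 1`, while an explicit epoch jumps straight to that position; this mirrors the behaviour described above, not PyTorch’s source.

```python
import math
from typing import Optional


class EpochTracker:
    """Hypothetical model of interleaved step() calls, assuming T_mult == 1."""

    def __init__(self, t_0: int) -> None:
        self.t_0 = t_0
        self.t_cur = 0       # T_cur right after construction
        self.last_epoch = 0  # assumed value right after construction

    def step(self, epoch: Optional[float] = None) -> None:
        if epoch is None:
            # Implicit step: one epoch past the last recorded epoch.
            epoch = self.last_epoch + 1
            self.t_cur = (self.t_cur + 1) % self.t_0
        else:
            # Explicit step: jump straight to the requested position.
            self.t_cur = epoch % self.t_0
        self.last_epoch = math.floor(epoch)


interleaved = EpochTracker(t_0=10)
for _ in range(20):
    interleaved.step()
interleaved.step(26)
interleaved.step()

direct = EpochTracker(t_0=10)
direct.step(27)
print(interleaved.last_epoch, interleaved.t_cur)  # 27 7
print(direct.last_epoch, direct.t_cur)            # 27 7
```

Both trackers end at the same epoch and cycle position, which is what makes the interleaved call sequence safe.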