Mosaic: Memory Profiling for PyTorch#

Author: Basil Wong

What you will learn:

Capture and analyze PyTorch memory snapshots

Identify memory savings from activation checkpointing

Debug unexpected memory usage from abandoned code

Integrate memory analysis into training pipelines

Prerequisites:

PyTorch v2.0.0 or later

CUDA-capable GPU

Basic understanding of PyTorch training loops

This tutorial demonstrates how to use Mosaic, a post-processing memory snapshot analysis tool for PyTorch. Mosaic helps analyze GPU memory usage in distributed deep learning, providing detailed insights into memory allocations, peak usage, and memory imbalances across parallel workers.

Mosaic was instrumental in debugging OOM issues during the 405B LLaMA training and is now open source.

Introduction to Mosaic#

Overview#

In distributed deep learning, understanding GPU memory usage is critical for optimizing training efficiency and debugging Out-of-Memory (OOM) errors. Mosaic is a post-analysis tool for memory usage designed to work with large-scale jobs. It analyzes memory snapshots captured while PyTorch training jobs run and reports memory allocations, peak usage, and memory imbalances across parallel workers.

Getting Started#

Clone the mosaic repository and install from the mosaic directory:

git clone https://github.com/facebookresearch/mosaic

cd mosaic

python3 -m venv venv

source venv/bin/activate

pip3 install -r requirements.txt

pip3 install -e .

Alternatively, install directly via pip:

pip install git+https://github.com/facebookresearch/mosaic.git

Simple Usage Examples#

1. Peak Memory Usage Analysis

When addressing memory problems like OOM errors, focusing on peak memory usage is crucial. The mosaic_get_memory_usage_peak command presents a stack trace of the memory allocations that contributed to the peak memory usage:

mosaic_get_memory_usage_peak --snapshot <path to snapshot>
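The same command can be launched from Python, for example at the end of a training script. The sketch below is only an illustration and is not part of Mosaic: `build_peak_command` and `run_peak_analysis` are hypothetical helper names, and the only things taken from the command above are the `mosaic_get_memory_usage_peak` executable and its `--snapshot` flag.

```python
import shutil
import subprocess
from typing import List


def build_peak_command(snapshot_path: str) -> List[str]:
    """Return the argument list for the peak-usage CLI (hypothetical helper)."""
    return ["mosaic_get_memory_usage_peak", "--snapshot", snapshot_path]


def run_peak_analysis(snapshot_path: str) -> bool:
    """Run the CLI on a snapshot; return False if the CLI is not on PATH."""
    cmd = build_peak_command(snapshot_path)
    if shutil.which(cmd[0]) is None:
        # Mosaic is not installed in this environment, so there is nothing to run.
        return False
    # check=True raises CalledProcessError if the tool exits with an error.
    subprocess.run(cmd, check=True)
    return True
```

Passing the arguments as a list rather than a shell string avoids quoting problems when the snapshot path contains spaces.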

2. Categorical Memory Profiling

Mosaic classifies allocations into categories (activation, backward, optimizer, etc.):

Activation Memory: Tensors saved for backward pass

Gradient Memory: Gradients computed during backpropagation

Optimizer State: Adam/SGD momentum and variance buffers

Parameter Memory: Model weights

mosaic_get_memory_profile --snapshot <path> --out-path <html> \
    --profile categories

An example HTML output looks like:

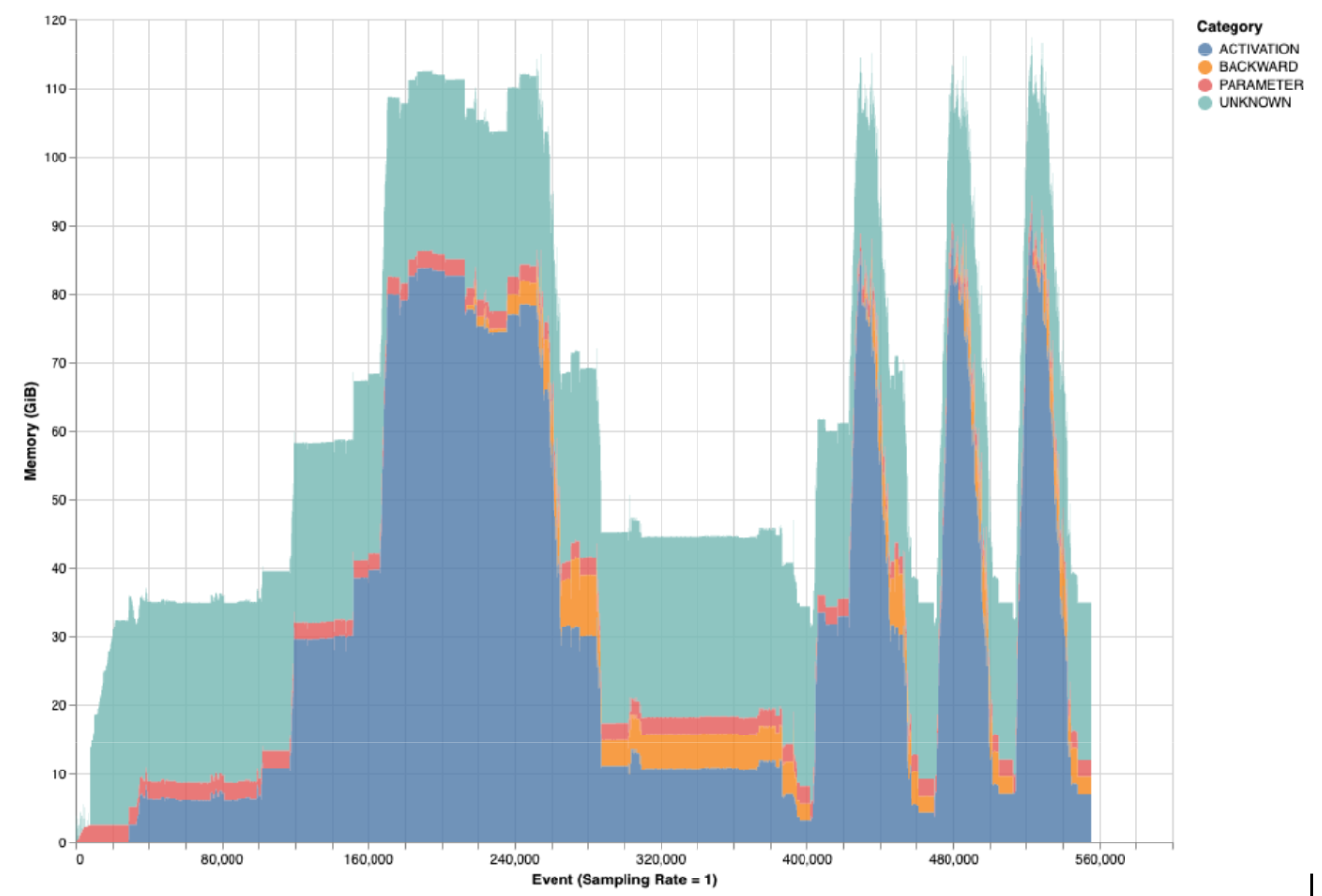

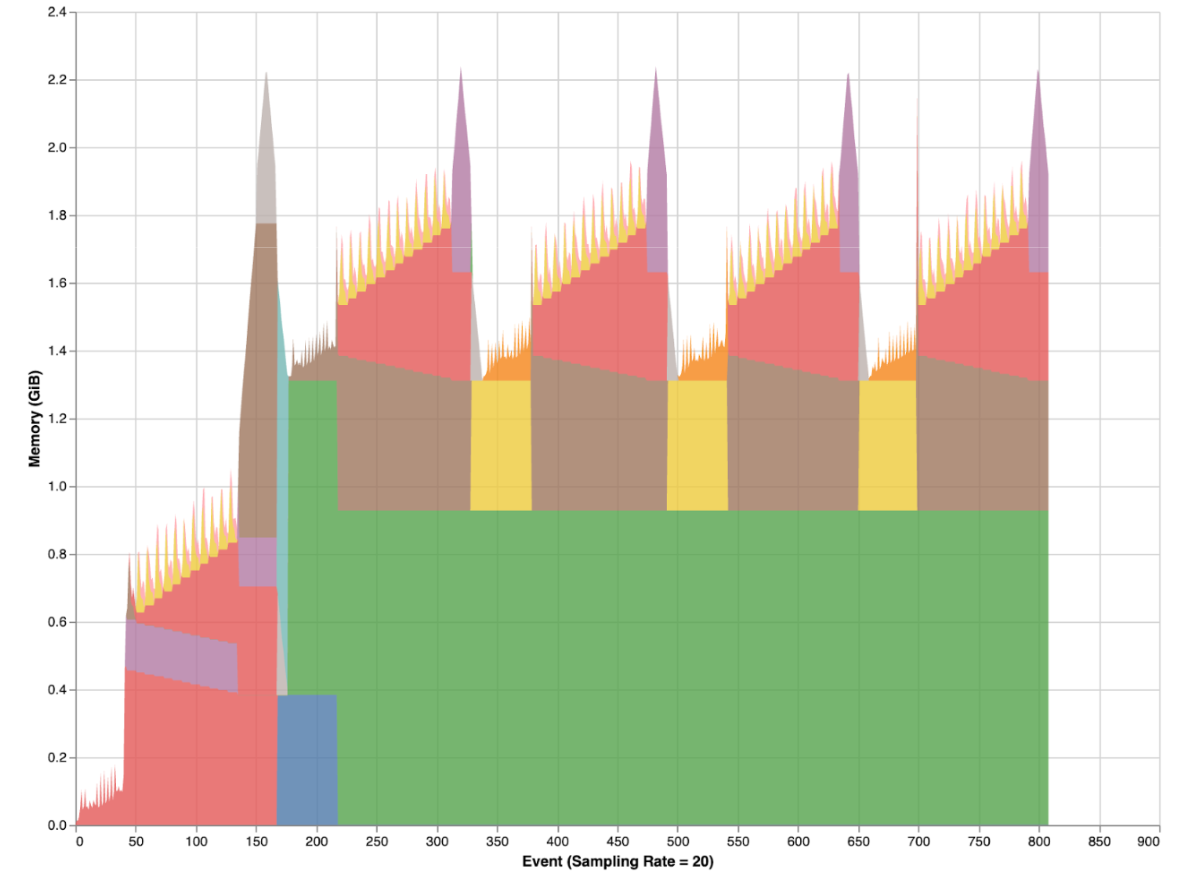

Categorical memory profiling showing memory breakdown by type (activation, gradient, optimizer, etc.)#
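To get a feel for the size of the non-activation categories before opening a profile, a back-of-the-envelope estimate helps. The sketch below is not Mosaic output. It assumes fp32 weights (4 bytes per value), one gradient per parameter, and an Adam-style optimizer that keeps two state buffers per parameter. The figure of roughly 124M parameters for the small GPT-2 model is an approximation, and `estimate_static_memory_gib` is a hypothetical helper name.

```python
GIB = 1024 ** 3  # bytes per GiB


def estimate_static_memory_gib(
    num_params: int,
    bytes_per_value: int = 4,          # assumption: fp32 training
    optimizer_states_per_param: int = 2,  # assumption: Adam-style momentum + variance
) -> dict:
    """Estimate parameter, gradient, and optimizer-state memory in GiB."""
    parameter = num_params * bytes_per_value
    gradient = num_params * bytes_per_value
    optimizer = num_params * bytes_per_value * optimizer_states_per_param
    return {
        "parameter": parameter / GIB,
        "gradient": gradient / GIB,
        "optimizer": optimizer / GIB,
        "total": (parameter + gradient + optimizer) / GIB,
    }


# Roughly 124M parameters, an approximate size for the small GPT-2 model.
for category, gib in estimate_static_memory_gib(124_000_000).items():
    print(f"{category:>10}: {gib:.2f} GiB")
```

Activation memory is left out on purpose: it depends on batch size, sequence length, and checkpointing, which is exactly what a measured profile shows and an estimate like this cannot.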

To maintain allocation order for the categories, add --preserve-allocation-order:

mosaic_get_memory_profile --snapshot <path> --out-path <html> \
    --profile categories --preserve-allocation-order

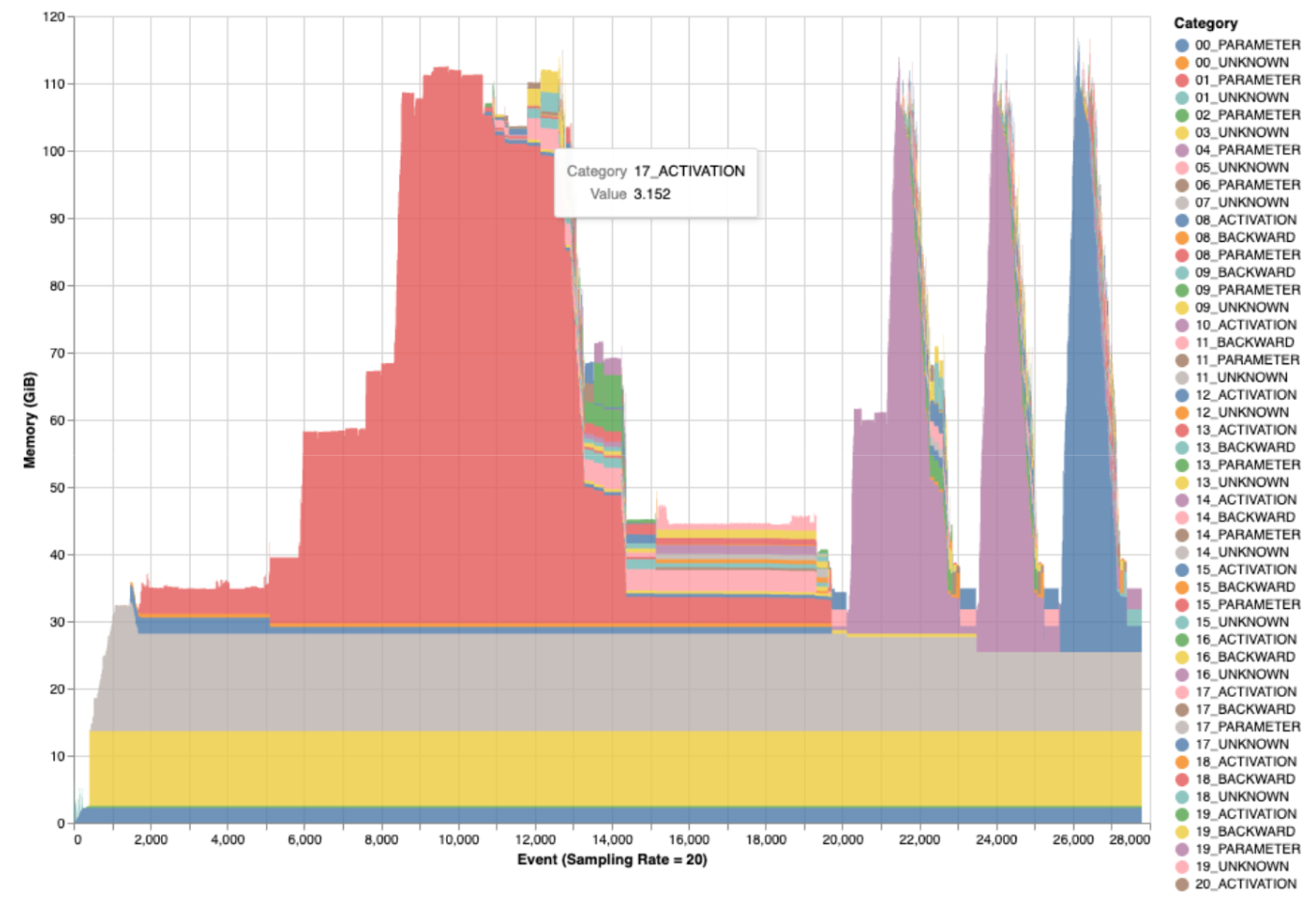

Categorical profiling with --preserve-allocation-order shows memory allocations in chronological order#

3. Custom Dictionary Profiling

For targeted analysis via regex pattern matching:

mosaic_get_memory_profile --snapshot <path> --profile custom \
    --custom-profile '{"ncclx": "ncclx"}'

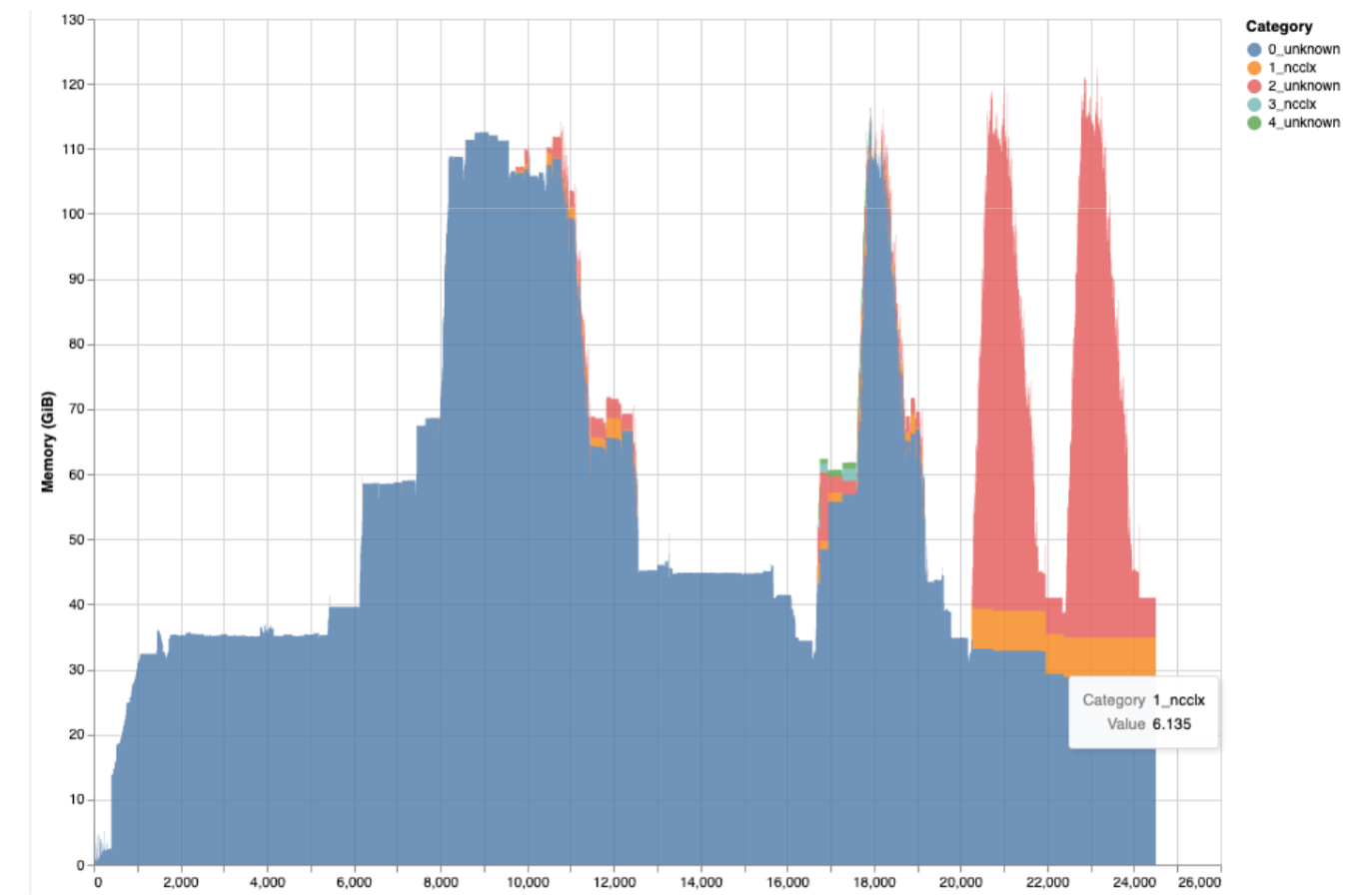

This is invaluable for tracking specific kernels, optimizers, or custom code patterns:

Custom profiling with regex patterns to track specific operations like NCCL communications#
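Hand-writing the JSON argument gets error-prone once a custom profile has several patterns. The sketch below builds the argument list programmatically. It assumes that each JSON key is a display label and each value is a regex, and that the patterns are compatible with Python's `re` syntax; neither assumption is confirmed by the command above. `build_custom_profile_command` is a hypothetical helper name, and the `--out-path` flag is borrowed from the categorical profiling commands.

```python
import json
import re
import shlex
from typing import Dict, List, Optional


def build_custom_profile_command(
    snapshot_path: str,
    patterns: Dict[str, str],
    out_path: Optional[str] = None,
) -> List[str]:
    """Build the argv for a custom-profile run (hypothetical helper)."""
    for label, pattern in patterns.items():
        # Fail early on a malformed pattern; re.compile raises re.error.
        re.compile(pattern)
    cmd = [
        "mosaic_get_memory_profile",
        "--snapshot", snapshot_path,
        "--profile", "custom",
        "--custom-profile", json.dumps(patterns),
    ]
    if out_path is not None:
        cmd += ["--out-path", out_path]
    return cmd


cmd = build_custom_profile_command("snapshot.pickle", {"ncclx": "ncclx"})
# shlex.join adds the shell quoting needed to paste the command into a terminal.
print(shlex.join(cmd))
```

The returned list can be passed directly to `subprocess.run`, which sidesteps shell quoting of the JSON string entirely.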

Dependencies and Imports#

Let’s set up the required dependencies and imports for this tutorial.

import subprocess
import sys
import shutil
from contextlib import contextmanager
import pickle

# Fix for sphinx-gallery environment where __main__.__file__ may not exist
# This is needed for transformers library compatibility
import os

if not hasattr(sys.modules["__main__"], "__file__"):
    # Use this file's path as a fallback, or a dummy path if __file__ is not available
    try:
        sys.modules["__main__"].__file__ = os.path.abspath(__file__)
    except NameError:
        # __file__ not available, use transformers modeling file as fallback
        import transformers.modeling_utils

        sys.modules["__main__"].__file__ = transformers.modeling_utils.__file__

import torch
from torch.utils.data import DataLoader, Dataset

# Install dependencies if needed
try:
    from transformers import GPT2LMHeadModel, GPT2Tokenizer
    from transformers.modeling_outputs import CausalLMOutputWithCrossAttentions
except ImportError:
    subprocess.check_call(
        [sys.executable, "-m", "pip", "install", "-q", "transformers"]
    )
    from transformers import GPT2LMHeadModel, GPT2Tokenizer
    from transformers.modeling_outputs import CausalLMOutputWithCrossAttentions

try:
    from mosaic.libmosaic.analyzer.memory_abstract import MemoryAbstract
except ImportError:
    subprocess.check_call(
        [
            sys.executable,
            "-m",
            "pip",
            "install",
            "-q",
            "git+https://github.com/facebookresearch/mosaic.git",
        ]
    )
    from mosaic.libmosaic.analyzer.memory_abstract import MemoryAbstract

print(f"PyTorch version: {torch.__version__}")
print(f"CUDA available: {torch.cuda.is_available()}")
if torch.cuda.is_available():
    print(f"GPU: {torch.cuda.get_device_name(0)}")

PyTorch version: 2.10.0+cu128

CUDA available: True

GPU: NVIDIA A10G

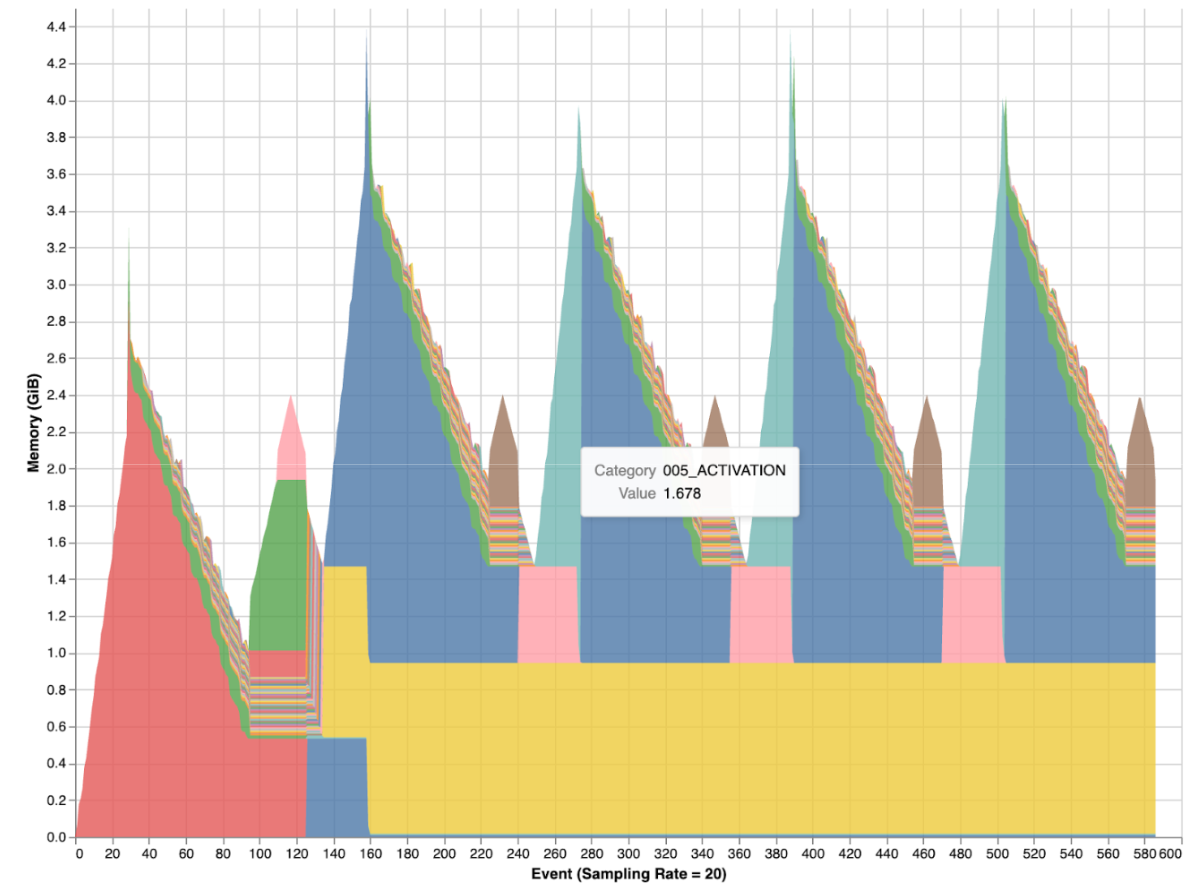

Case 1: Understanding Memory Differences with Activation Checkpointing#

This section demonstrates how to use Mosaic to analyze and compare GPU memory usage between different model configurations.

What we’ll do:

Train GPT-2 and capture a memory snapshot (baseline)

Enable activation checkpointing and train again (modified)

Use Mosaic to identify exactly where memory savings occur

Training Function for Activation Checkpointing Comparison#

def run_training_ac(
    activation_checkpointing: bool,
    snapshot_path: str,
    batch_size: int = 4,
    seq_length: int = 512,
    num_steps: int = 5,
):
    """Run training loop and capture memory snapshot.

    Args:
        activation_checkpointing: Whether to enable gradient checkpointing.
        snapshot_path: Path to save the memory snapshot.
        batch_size: Training batch size.
        seq_length: Sequence length for input tokens.
        num_steps: Number of training steps to run.

    Returns:
        Peak GPU memory usage in GB.
    """
    # Clear any previous memory
    torch.cuda.empty_cache()
    torch.cuda.reset_peak_memory_stats()

    device = torch.device("cuda")

    # Load model
    print(f"Loading GPT-2 (activation_checkpointing={activation_checkpointing})...")
    model = GPT2LMHeadModel.from_pretrained("gpt2")

    if activation_checkpointing:
        model.gradient_checkpointing_enable()
        print("Activation checkpointing is ENABLED")
    else:
        print("Activation checkpointing is DISABLED")

    model = model.to(device)
    model.train()

    # Create dataset and dataloader
    tokenizer = GPT2Tokenizer.from_pretrained("gpt2")
    dataset = RandomTokenDataset(
        vocab_size=tokenizer.vocab_size,
        seq_length=seq_length,
        num_samples=100,
    )
    dataloader = DataLoader(dataset, batch_size=batch_size, shuffle=True)

    # Setup optimizer
    optimizer = torch.optim.AdamW(model.parameters(), lr=1e-5)

    # Training loop with memory capture
    print(f"Running {num_steps} training steps...")

    with capture_memory_snapshot(snapshot_path):
        for step, batch in enumerate(dataloader):
            if step >= num_steps:
                break

            batch = {k: v.to(device) for k, v in batch.items()}

            optimizer.zero_grad()
            outputs = model(input_ids=batch["input_ids"], labels=batch["labels"])
            loss = outputs.loss
            loss.backward()
            optimizer.step()

            print(f" Step {step + 1}/{num_steps}, Loss: {loss.item():.4f}")

    peak_memory_gb = torch.cuda.max_memory_allocated() / (1024**3)
    print(f"✓ Peak GPU memory: {peak_memory_gb:.2f} GB")

    # Cleanup
    del model, optimizer
    torch.cuda.empty_cache()

    return peak_memory_gb
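The training function wraps its loop in a `capture_memory_snapshot` context manager. A minimal sketch of what such a helper could look like is given here, assuming it is built on PyTorch's memory-history recorder (`torch.cuda.memory._record_memory_history` and `torch.cuda.memory._dump_snapshot`, using the keyword arguments available in PyTorch 2.1 and later). The `max_entries` default is an arbitrary choice for illustration, and the tutorial's own helper may differ.

```python
from contextlib import contextmanager


@contextmanager
def capture_memory_snapshot(snapshot_path: str, max_entries: int = 100_000):
    """Record CUDA allocator history for the wrapped block, then dump it to disk."""
    # Imported lazily so that defining the helper does not require torch.
    import torch

    # Start recording allocation and free events together with their stack traces.
    torch.cuda.memory._record_memory_history(max_entries=max_entries)
    try:
        yield
    finally:
        # Write the snapshot even if the wrapped code raised (for example on OOM),
        # then switch recording off again.
        torch.cuda.memory._dump_snapshot(snapshot_path)
        torch.cuda.memory._record_memory_history(enabled=None)
```

Dumping inside `finally` is a deliberate choice in this sketch: the snapshot is most useful precisely when the wrapped code fails with an out-of-memory error.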

Run Baseline Training (Without Activation Checkpointing)#

Note

This tutorial requires a CUDA-capable GPU. If you’re running in Google Colab, make sure to select a GPU runtime: Runtime → Change runtime type → Hardware accelerator → GPU

if not torch.cuda.is_available():
    print("=" * 60)
    print("WARNING: No CUDA GPU detected!")
    print("=" * 60)
    print("\nThis tutorial requires a CUDA-capable GPU for memory profiling.")
    print("\nIf you're running in Google Colab:")
    print(" 1. Go to Runtime → Change runtime type")
    print(" 2. Set Hardware accelerator to 'GPU'")
    print(" 3. Click 'Save' and re-run the notebook")
    print("\nSkipping GPU memory profiling examples...")
    HAS_CUDA = False
else:
    HAS_CUDA = True

# Check if Mosaic CLI is available
HAS_MOSAIC_CLI = shutil.which("mosaic_get_memory_profile") is not None
if HAS_CUDA and not HAS_MOSAIC_CLI:
    print("Note: Mosaic CLI not found. Install Mosaic to generate HTML profiles.")
    print(" pip install git+https://github.com/facebookresearch/mosaic.git")

if HAS_CUDA:
    print("=" * 60)
    print("BASELINE: Training WITHOUT Activation Checkpointing")
    print("=" * 60)

    baseline_memory = run_training_ac(
        activation_checkpointing=False,
        snapshot_path="snapshot_baseline.pickle",
        batch_size=4,
        seq_length=512,
        num_steps=5,
    )

============================================================

BASELINE: Training WITHOUT Activation Checkpointing

============================================================

Loading GPT-2 (activation_checkpointing=False)...


Loading weights: 74%|███████▍ | 110/148 [00:00<00:00, 1311.29it/s, Materializing param=transformer.h.9.attn.c_attn.weight]

Loading weights: 74%|███████▍ | 110/148 [00:00<00:00, 1308.72it/s, Materializing param=transformer.h.9.attn.c_attn.weight]

Loading weights: 75%|███████▌ | 111/148 [00:00<00:00, 1317.02it/s, Materializing param=transformer.h.9.attn.c_proj.bias]

Loading weights: 75%|███████▌ | 111/148 [00:00<00:00, 1314.58it/s, Materializing param=transformer.h.9.attn.c_proj.bias]

Loading weights: 76%|███████▌ | 112/148 [00:00<00:00, 1322.71it/s, Materializing param=transformer.h.9.attn.c_proj.weight]

Loading weights: 76%|███████▌ | 112/148 [00:00<00:00, 1319.86it/s, Materializing param=transformer.h.9.attn.c_proj.weight]

Loading weights: 76%|███████▋ | 113/148 [00:00<00:00, 1327.53it/s, Materializing param=transformer.h.9.ln_1.bias]

Loading weights: 76%|███████▋ | 113/148 [00:00<00:00, 1324.93it/s, Materializing param=transformer.h.9.ln_1.bias]

Loading weights: 77%|███████▋ | 114/148 [00:00<00:00, 1332.74it/s, Materializing param=transformer.h.9.ln_1.weight]

Loading weights: 77%|███████▋ | 114/148 [00:00<00:00, 1329.80it/s, Materializing param=transformer.h.9.ln_1.weight]

Loading weights: 78%|███████▊ | 115/148 [00:00<00:00, 1337.64it/s, Materializing param=transformer.h.9.ln_2.bias]

Loading weights: 78%|███████▊ | 115/148 [00:00<00:00, 1334.94it/s, Materializing param=transformer.h.9.ln_2.bias]

Loading weights: 78%|███████▊ | 116/148 [00:00<00:00, 1342.74it/s, Materializing param=transformer.h.9.ln_2.weight]

Loading weights: 78%|███████▊ | 116/148 [00:00<00:00, 1339.80it/s, Materializing param=transformer.h.9.ln_2.weight]

Loading weights: 79%|███████▉ | 117/148 [00:00<00:00, 1347.54it/s, Materializing param=transformer.h.9.mlp.c_fc.bias]

Loading weights: 79%|███████▉ | 117/148 [00:00<00:00, 1344.80it/s, Materializing param=transformer.h.9.mlp.c_fc.bias]

Loading weights: 80%|███████▉ | 118/148 [00:00<00:00, 1352.57it/s, Materializing param=transformer.h.9.mlp.c_fc.weight]

Loading weights: 80%|███████▉ | 118/148 [00:00<00:00, 1349.71it/s, Materializing param=transformer.h.9.mlp.c_fc.weight]

Loading weights: 80%|████████ | 119/148 [00:00<00:00, 1357.32it/s, Materializing param=transformer.h.9.mlp.c_proj.bias]

Loading weights: 80%|████████ | 119/148 [00:00<00:00, 1354.43it/s, Materializing param=transformer.h.9.mlp.c_proj.bias]

Loading weights: 81%|████████ | 120/148 [00:00<00:00, 1362.07it/s, Materializing param=transformer.h.9.mlp.c_proj.weight]

Loading weights: 81%|████████ | 120/148 [00:00<00:00, 1359.50it/s, Materializing param=transformer.h.9.mlp.c_proj.weight]

Loading weights: 82%|████████▏ | 121/148 [00:00<00:00, 1367.20it/s, Materializing param=transformer.h.10.attn.c_attn.bias]

Loading weights: 82%|████████▏ | 121/148 [00:00<00:00, 1364.43it/s, Materializing param=transformer.h.10.attn.c_attn.bias]

Loading weights: 82%|████████▏ | 122/148 [00:00<00:00, 1371.68it/s, Materializing param=transformer.h.10.attn.c_attn.weight]

Loading weights: 82%|████████▏ | 122/148 [00:00<00:00, 1369.04it/s, Materializing param=transformer.h.10.attn.c_attn.weight]

Loading weights: 83%|████████▎ | 123/148 [00:00<00:00, 1376.52it/s, Materializing param=transformer.h.10.attn.c_proj.bias]

Loading weights: 83%|████████▎ | 123/148 [00:00<00:00, 1373.73it/s, Materializing param=transformer.h.10.attn.c_proj.bias]

Loading weights: 84%|████████▍ | 124/148 [00:00<00:00, 1380.85it/s, Materializing param=transformer.h.10.attn.c_proj.weight]

Loading weights: 84%|████████▍ | 124/148 [00:00<00:00, 1377.98it/s, Materializing param=transformer.h.10.attn.c_proj.weight]

Loading weights: 84%|████████▍ | 125/148 [00:00<00:00, 1385.20it/s, Materializing param=transformer.h.10.ln_1.bias]

Loading weights: 84%|████████▍ | 125/148 [00:00<00:00, 1382.15it/s, Materializing param=transformer.h.10.ln_1.bias]

Loading weights: 85%|████████▌ | 126/148 [00:00<00:00, 1389.32it/s, Materializing param=transformer.h.10.ln_1.weight]

Loading weights: 85%|████████▌ | 126/148 [00:00<00:00, 1386.64it/s, Materializing param=transformer.h.10.ln_1.weight]

Loading weights: 86%|████████▌ | 127/148 [00:00<00:00, 1394.06it/s, Materializing param=transformer.h.10.ln_2.bias]

Loading weights: 86%|████████▌ | 127/148 [00:00<00:00, 1391.31it/s, Materializing param=transformer.h.10.ln_2.bias]

Loading weights: 86%|████████▋ | 128/148 [00:00<00:00, 1398.65it/s, Materializing param=transformer.h.10.ln_2.weight]

Loading weights: 86%|████████▋ | 128/148 [00:00<00:00, 1395.93it/s, Materializing param=transformer.h.10.ln_2.weight]

Loading weights: 87%|████████▋ | 129/148 [00:00<00:00, 1403.17it/s, Materializing param=transformer.h.10.mlp.c_fc.bias]

Loading weights: 87%|████████▋ | 129/148 [00:00<00:00, 1400.40it/s, Materializing param=transformer.h.10.mlp.c_fc.bias]

Loading weights: 88%|████████▊ | 130/148 [00:00<00:00, 1407.22it/s, Materializing param=transformer.h.10.mlp.c_fc.weight]

Loading weights: 88%|████████▊ | 130/148 [00:00<00:00, 1404.40it/s, Materializing param=transformer.h.10.mlp.c_fc.weight]

Loading weights: 89%|████████▊ | 131/148 [00:00<00:00, 1411.61it/s, Materializing param=transformer.h.10.mlp.c_proj.bias]

Loading weights: 89%|████████▊ | 131/148 [00:00<00:00, 1408.89it/s, Materializing param=transformer.h.10.mlp.c_proj.bias]

Loading weights: 89%|████████▉ | 132/148 [00:00<00:00, 1416.08it/s, Materializing param=transformer.h.10.mlp.c_proj.weight]

Loading weights: 89%|████████▉ | 132/148 [00:00<00:00, 1413.42it/s, Materializing param=transformer.h.10.mlp.c_proj.weight]

Loading weights: 90%|████████▉ | 133/148 [00:00<00:00, 1419.99it/s, Materializing param=transformer.h.11.attn.c_attn.bias]

Loading weights: 90%|████████▉ | 133/148 [00:00<00:00, 1416.92it/s, Materializing param=transformer.h.11.attn.c_attn.bias]

Loading weights: 91%|█████████ | 134/148 [00:00<00:00, 1423.63it/s, Materializing param=transformer.h.11.attn.c_attn.weight]

Loading weights: 91%|█████████ | 134/148 [00:00<00:00, 1420.82it/s, Materializing param=transformer.h.11.attn.c_attn.weight]

Loading weights: 91%|█████████ | 135/148 [00:00<00:00, 1427.27it/s, Materializing param=transformer.h.11.attn.c_proj.bias]

Loading weights: 91%|█████████ | 135/148 [00:00<00:00, 1424.19it/s, Materializing param=transformer.h.11.attn.c_proj.bias]

Loading weights: 92%|█████████▏| 136/148 [00:00<00:00, 1430.69it/s, Materializing param=transformer.h.11.attn.c_proj.weight]

Loading weights: 92%|█████████▏| 136/148 [00:00<00:00, 1427.81it/s, Materializing param=transformer.h.11.attn.c_proj.weight]

Loading weights: 93%|█████████▎| 137/148 [00:00<00:00, 1434.29it/s, Materializing param=transformer.h.11.ln_1.bias]

Loading weights: 93%|█████████▎| 137/148 [00:00<00:00, 1431.62it/s, Materializing param=transformer.h.11.ln_1.bias]

Loading weights: 93%|█████████▎| 138/148 [00:00<00:00, 1438.20it/s, Materializing param=transformer.h.11.ln_1.weight]

Loading weights: 93%|█████████▎| 138/148 [00:00<00:00, 1435.49it/s, Materializing param=transformer.h.11.ln_1.weight]

Loading weights: 94%|█████████▍| 139/148 [00:00<00:00, 1442.04it/s, Materializing param=transformer.h.11.ln_2.bias]

Loading weights: 94%|█████████▍| 139/148 [00:00<00:00, 1439.35it/s, Materializing param=transformer.h.11.ln_2.bias]

Loading weights: 95%|█████████▍| 140/148 [00:00<00:00, 1445.81it/s, Materializing param=transformer.h.11.ln_2.weight]

Loading weights: 95%|█████████▍| 140/148 [00:00<00:00, 1443.16it/s, Materializing param=transformer.h.11.ln_2.weight]

Loading weights: 95%|█████████▌| 141/148 [00:00<00:00, 1449.63it/s, Materializing param=transformer.h.11.mlp.c_fc.bias]

Loading weights: 95%|█████████▌| 141/148 [00:00<00:00, 1447.04it/s, Materializing param=transformer.h.11.mlp.c_fc.bias]

Loading weights: 96%|█████████▌| 142/148 [00:00<00:00, 1453.32it/s, Materializing param=transformer.h.11.mlp.c_fc.weight]

Loading weights: 96%|█████████▌| 142/148 [00:00<00:00, 1450.74it/s, Materializing param=transformer.h.11.mlp.c_fc.weight]

Loading weights: 97%|█████████▋| 143/148 [00:00<00:00, 1457.01it/s, Materializing param=transformer.h.11.mlp.c_proj.bias]

Loading weights: 97%|█████████▋| 143/148 [00:00<00:00, 1454.21it/s, Materializing param=transformer.h.11.mlp.c_proj.bias]

Loading weights: 97%|█████████▋| 144/148 [00:00<00:00, 1460.41it/s, Materializing param=transformer.h.11.mlp.c_proj.weight]

Loading weights: 97%|█████████▋| 144/148 [00:00<00:00, 1457.79it/s, Materializing param=transformer.h.11.mlp.c_proj.weight]

Loading weights: 98%|█████████▊| 145/148 [00:00<00:00, 1463.96it/s, Materializing param=transformer.ln_f.bias]

Loading weights: 98%|█████████▊| 145/148 [00:00<00:00, 1461.41it/s, Materializing param=transformer.ln_f.bias]

Loading weights: 99%|█████████▊| 146/148 [00:00<00:00, 1467.76it/s, Materializing param=transformer.ln_f.weight]

Loading weights: 99%|█████████▊| 146/148 [00:00<00:00, 1465.12it/s, Materializing param=transformer.ln_f.weight]

Loading weights: 99%|█████████▉| 147/148 [00:00<00:00, 1471.42it/s, Materializing param=transformer.wpe.weight]

Loading weights: 99%|█████████▉| 147/148 [00:00<00:00, 1468.79it/s, Materializing param=transformer.wpe.weight]

Loading weights: 100%|██████████| 148/148 [00:00<00:00, 1475.22it/s, Materializing param=transformer.wpe.weight]

Loading weights: 100%|██████████| 148/148 [00:00<00:00, 1475.22it/s, Materializing param=transformer.wte.weight]

Loading weights: 100%|██████████| 148/148 [00:00<00:00, 1475.22it/s, Materializing param=transformer.wte.weight]

Loading weights: 100%|██████████| 148/148 [00:00<00:00, 1465.58it/s, Materializing param=transformer.wte.weight]

GPT2LMHeadModel LOAD REPORT from: gpt2
Key                  | Status     |  |
---------------------+------------+--+-
h.{0...11}.attn.bias | UNEXPECTED |  |

Notes:
- UNEXPECTED :can be ignored when loading from different task/architecture; not ok if you expect identical arch.

Warning: You are sending unauthenticated requests to the HF Hub. Please set a HF_TOKEN to enable higher rate limits and faster downloads.

Activation checkpointing is DISABLED

Running 5 training steps...

`loss_type=None` was set in the config but it is unrecognized. Using the default loss: `ForCausalLMLoss`.

Step 1/5, Loss: 12.2627

Step 2/5, Loss: 12.1125

Step 3/5, Loss: 11.8959

Step 4/5, Loss: 11.7933

Step 5/5, Loss: 11.7729

✓ Memory snapshot saved to snapshot_baseline.pickle

✓ Peak GPU memory: 5.12 GB
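The peak figure above is the kind of number that gets compared across runs. As a minimal sketch of that comparison, the helpers below convert a raw byte count (for example, the value returned by `torch.cuda.max_memory_allocated()`) to GB and compute the relative savings between two runs. The names `bytes_to_gb` and `memory_savings` are hypothetical and not part of the tutorial or Mosaic, the 1024**3 divisor is an assumption about how GB is defined here, and the 3.00 GB "modified" value is an invented placeholder rather than a measured result.

```python
from typing import Tuple


def bytes_to_gb(num_bytes: int) -> float:
    """Convert a byte count to GB, assuming 1 GB = 1024**3 bytes."""
    return num_bytes / (1024 ** 3)


def memory_savings(baseline_gb: float, modified_gb: float) -> Tuple[float, float]:
    """Return (absolute savings in GB, savings as a percentage of the baseline)."""
    saved_gb = baseline_gb - modified_gb
    # Guard against a zero baseline, e.g. when the baseline run was skipped.
    saved_pct = 100 * saved_gb / baseline_gb if baseline_gb > 0 else 0.0
    return saved_gb, saved_pct


# Placeholder inputs: 5.12 GB is the baseline peak reported above;
# 3.00 GB is an invented value used only to exercise the helper.
saved_gb, saved_pct = memory_savings(5.12, 3.00)
print(f"Memory Saved: {saved_gb:.2f} GB ({saved_pct:.1f}%)")
```

Returning both the absolute and relative figures keeps the zero-baseline guard in one place instead of repeating it at every print site.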

Run Modified Training (With Activation Checkpointing)#

if HAS_CUDA:
    print("\n" + "=" * 60)
    print("MODIFIED: Training WITH Activation Checkpointing")
    print("=" * 60)

    ac_memory = run_training_ac(
        activation_checkpointing=True,
        snapshot_path="snapshot_with_ac.pickle",
        batch_size=4,
        seq_length=512,
        num_steps=5,
    )

    # Summary
    print("\n" + "=" * 60)
    print("MEMORY COMPARISON SUMMARY")
    print("=" * 60)
    print(f"Baseline (no AC): {baseline_memory:.2f} GB")
    print(f"With AC: {ac_memory:.2f} GB")
    if baseline_memory > 0:
        saved_pct = 100 * (baseline_memory - ac_memory) / baseline_memory
        print(
            f"Memory Saved: {baseline_memory - ac_memory:.2f} GB ({saved_pct:.1f}%)"
        )

============================================================

MODIFIED: Training WITH Activation Checkpointing

============================================================

Loading GPT-2 (activation_checkpointing=True)...

Loading weights: 0%| | 0/148 [00:00<?, ?it/s]


Loading weights: 62%|██████▏ | 92/148 [00:00<00:00, 1316.95it/s, Materializing param=transformer.h.7.ln_2.weight]

Loading weights: 63%|██████▎ | 93/148 [00:00<00:00, 1327.01it/s, Materializing param=transformer.h.7.mlp.c_fc.bias]

Loading weights: 63%|██████▎ | 93/148 [00:00<00:00, 1323.76it/s, Materializing param=transformer.h.7.mlp.c_fc.bias]

Loading weights: 64%|██████▎ | 94/148 [00:00<00:00, 1333.71it/s, Materializing param=transformer.h.7.mlp.c_fc.weight]

Loading weights: 64%|██████▎ | 94/148 [00:00<00:00, 1330.46it/s, Materializing param=transformer.h.7.mlp.c_fc.weight]

Loading weights: 64%|██████▍ | 95/148 [00:00<00:00, 1340.31it/s, Materializing param=transformer.h.7.mlp.c_proj.bias]

Loading weights: 64%|██████▍ | 95/148 [00:00<00:00, 1336.77it/s, Materializing param=transformer.h.7.mlp.c_proj.bias]

Loading weights: 65%|██████▍ | 96/148 [00:00<00:00, 1346.44it/s, Materializing param=transformer.h.7.mlp.c_proj.weight]

Loading weights: 65%|██████▍ | 96/148 [00:00<00:00, 1343.21it/s, Materializing param=transformer.h.7.mlp.c_proj.weight]

Loading weights: 66%|██████▌ | 97/148 [00:00<00:00, 1352.55it/s, Materializing param=transformer.h.8.attn.c_attn.bias]

Loading weights: 66%|██████▌ | 97/148 [00:00<00:00, 1349.52it/s, Materializing param=transformer.h.8.attn.c_attn.bias]

Loading weights: 66%|██████▌ | 98/148 [00:00<00:00, 1358.92it/s, Materializing param=transformer.h.8.attn.c_attn.weight]

Loading weights: 66%|██████▌ | 98/148 [00:00<00:00, 1355.65it/s, Materializing param=transformer.h.8.attn.c_attn.weight]

Loading weights: 67%|██████▋ | 99/148 [00:00<00:00, 1365.08it/s, Materializing param=transformer.h.8.attn.c_proj.bias]

Loading weights: 67%|██████▋ | 99/148 [00:00<00:00, 1361.93it/s, Materializing param=transformer.h.8.attn.c_proj.bias]

Loading weights: 68%|██████▊ | 100/148 [00:00<00:00, 1371.47it/s, Materializing param=transformer.h.8.attn.c_proj.weight]

Loading weights: 68%|██████▊ | 100/148 [00:00<00:00, 1368.33it/s, Materializing param=transformer.h.8.attn.c_proj.weight]

Loading weights: 68%|██████▊ | 101/148 [00:00<00:00, 1377.82it/s, Materializing param=transformer.h.8.ln_1.bias]

Loading weights: 68%|██████▊ | 101/148 [00:00<00:00, 1374.74it/s, Materializing param=transformer.h.8.ln_1.bias]

Loading weights: 69%|██████▉ | 102/148 [00:00<00:00, 1384.09it/s, Materializing param=transformer.h.8.ln_1.weight]

Loading weights: 69%|██████▉ | 102/148 [00:00<00:00, 1381.05it/s, Materializing param=transformer.h.8.ln_1.weight]

Loading weights: 70%|██████▉ | 103/148 [00:00<00:00, 1390.26it/s, Materializing param=transformer.h.8.ln_2.bias]

Loading weights: 70%|██████▉ | 103/148 [00:00<00:00, 1387.25it/s, Materializing param=transformer.h.8.ln_2.bias]

Loading weights: 70%|███████ | 104/148 [00:00<00:00, 1396.57it/s, Materializing param=transformer.h.8.ln_2.weight]

Loading weights: 70%|███████ | 104/148 [00:00<00:00, 1393.42it/s, Materializing param=transformer.h.8.ln_2.weight]

Loading weights: 71%|███████ | 105/148 [00:00<00:00, 1402.61it/s, Materializing param=transformer.h.8.mlp.c_fc.bias]

Loading weights: 71%|███████ | 105/148 [00:00<00:00, 1399.35it/s, Materializing param=transformer.h.8.mlp.c_fc.bias]

Loading weights: 72%|███████▏ | 106/148 [00:00<00:00, 1408.26it/s, Materializing param=transformer.h.8.mlp.c_fc.weight]

Loading weights: 72%|███████▏ | 106/148 [00:00<00:00, 1405.27it/s, Materializing param=transformer.h.8.mlp.c_fc.weight]

Loading weights: 72%|███████▏ | 107/148 [00:00<00:00, 1414.34it/s, Materializing param=transformer.h.8.mlp.c_proj.bias]

Loading weights: 72%|███████▏ | 107/148 [00:00<00:00, 1411.26it/s, Materializing param=transformer.h.8.mlp.c_proj.bias]

Loading weights: 73%|███████▎ | 108/148 [00:00<00:00, 1420.30it/s, Materializing param=transformer.h.8.mlp.c_proj.weight]

Loading weights: 73%|███████▎ | 108/148 [00:00<00:00, 1417.15it/s, Materializing param=transformer.h.8.mlp.c_proj.weight]

Loading weights: 74%|███████▎ | 109/148 [00:00<00:00, 1425.57it/s, Materializing param=transformer.h.9.attn.c_attn.bias]

Loading weights: 74%|███████▎ | 109/148 [00:00<00:00, 1422.50it/s, Materializing param=transformer.h.9.attn.c_attn.bias]

Loading weights: 74%|███████▍ | 110/148 [00:00<00:00, 1431.29it/s, Materializing param=transformer.h.9.attn.c_attn.weight]

Loading weights: 74%|███████▍ | 110/148 [00:00<00:00, 1428.26it/s, Materializing param=transformer.h.9.attn.c_attn.weight]

Loading weights: 75%|███████▌ | 111/148 [00:00<00:00, 1437.15it/s, Materializing param=transformer.h.9.attn.c_proj.bias]

Loading weights: 75%|███████▌ | 111/148 [00:00<00:00, 1434.13it/s, Materializing param=transformer.h.9.attn.c_proj.bias]

Loading weights: 76%|███████▌ | 112/148 [00:00<00:00, 1442.83it/s, Materializing param=transformer.h.9.attn.c_proj.weight]

Loading weights: 76%|███████▌ | 112/148 [00:00<00:00, 1439.75it/s, Materializing param=transformer.h.9.attn.c_proj.weight]

Loading weights: 76%|███████▋ | 113/148 [00:00<00:00, 1448.38it/s, Materializing param=transformer.h.9.ln_1.bias]

Loading weights: 76%|███████▋ | 113/148 [00:00<00:00, 1445.32it/s, Materializing param=transformer.h.9.ln_1.bias]

Loading weights: 77%|███████▋ | 114/148 [00:00<00:00, 1454.03it/s, Materializing param=transformer.h.9.ln_1.weight]

Loading weights: 77%|███████▋ | 114/148 [00:00<00:00, 1450.96it/s, Materializing param=transformer.h.9.ln_1.weight]

Loading weights: 78%|███████▊ | 115/148 [00:00<00:00, 1459.59it/s, Materializing param=transformer.h.9.ln_2.bias]

Loading weights: 78%|███████▊ | 115/148 [00:00<00:00, 1456.54it/s, Materializing param=transformer.h.9.ln_2.bias]

Loading weights: 78%|███████▊ | 116/148 [00:00<00:00, 1464.89it/s, Materializing param=transformer.h.9.ln_2.weight]

Loading weights: 78%|███████▊ | 116/148 [00:00<00:00, 1461.80it/s, Materializing param=transformer.h.9.ln_2.weight]

Loading weights: 79%|███████▉ | 117/148 [00:00<00:00, 1470.22it/s, Materializing param=transformer.h.9.mlp.c_fc.bias]

Loading weights: 79%|███████▉ | 117/148 [00:00<00:00, 1467.17it/s, Materializing param=transformer.h.9.mlp.c_fc.bias]

Loading weights: 80%|███████▉ | 118/148 [00:00<00:00, 1475.53it/s, Materializing param=transformer.h.9.mlp.c_fc.weight]

Loading weights: 80%|███████▉ | 118/148 [00:00<00:00, 1472.53it/s, Materializing param=transformer.h.9.mlp.c_fc.weight]

Loading weights: 80%|████████ | 119/148 [00:00<00:00, 1480.89it/s, Materializing param=transformer.h.9.mlp.c_proj.bias]

Loading weights: 80%|████████ | 119/148 [00:00<00:00, 1477.77it/s, Materializing param=transformer.h.9.mlp.c_proj.bias]

Loading weights: 81%|████████ | 120/148 [00:00<00:00, 1485.93it/s, Materializing param=transformer.h.9.mlp.c_proj.weight]

Loading weights: 81%|████████ | 120/148 [00:00<00:00, 1482.85it/s, Materializing param=transformer.h.9.mlp.c_proj.weight]

Loading weights: 82%|████████▏ | 121/148 [00:00<00:00, 1491.03it/s, Materializing param=transformer.h.10.attn.c_attn.bias]

Loading weights: 82%|████████▏ | 121/148 [00:00<00:00, 1487.93it/s, Materializing param=transformer.h.10.attn.c_attn.bias]

Loading weights: 82%|████████▏ | 122/148 [00:00<00:00, 1495.99it/s, Materializing param=transformer.h.10.attn.c_attn.weight]

Loading weights: 82%|████████▏ | 122/148 [00:00<00:00, 1492.95it/s, Materializing param=transformer.h.10.attn.c_attn.weight]

Loading weights: 83%|████████▎ | 123/148 [00:00<00:00, 1501.01it/s, Materializing param=transformer.h.10.attn.c_proj.bias]

Loading weights: 83%|████████▎ | 123/148 [00:00<00:00, 1497.90it/s, Materializing param=transformer.h.10.attn.c_proj.bias]

Loading weights: 84%|████████▍ | 124/148 [00:00<00:00, 1505.81it/s, Materializing param=transformer.h.10.attn.c_proj.weight]

Loading weights: 84%|████████▍ | 124/148 [00:00<00:00, 1502.78it/s, Materializing param=transformer.h.10.attn.c_proj.weight]

Loading weights: 84%|████████▍ | 125/148 [00:00<00:00, 1510.65it/s, Materializing param=transformer.h.10.ln_1.bias]

Loading weights: 84%|████████▍ | 125/148 [00:00<00:00, 1507.63it/s, Materializing param=transformer.h.10.ln_1.bias]

Loading weights: 85%|████████▌ | 126/148 [00:00<00:00, 1515.35it/s, Materializing param=transformer.h.10.ln_1.weight]

Loading weights: 85%|████████▌ | 126/148 [00:00<00:00, 1512.31it/s, Materializing param=transformer.h.10.ln_1.weight]

Loading weights: 86%|████████▌ | 127/148 [00:00<00:00, 1520.16it/s, Materializing param=transformer.h.10.ln_2.bias]

Loading weights: 86%|████████▌ | 127/148 [00:00<00:00, 1517.14it/s, Materializing param=transformer.h.10.ln_2.bias]

Loading weights: 86%|████████▋ | 128/148 [00:00<00:00, 1524.99it/s, Materializing param=transformer.h.10.ln_2.weight]

Loading weights: 86%|████████▋ | 128/148 [00:00<00:00, 1521.97it/s, Materializing param=transformer.h.10.ln_2.weight]

Loading weights: 87%|████████▋ | 129/148 [00:00<00:00, 1529.79it/s, Materializing param=transformer.h.10.mlp.c_fc.bias]

Loading weights: 87%|████████▋ | 129/148 [00:00<00:00, 1526.80it/s, Materializing param=transformer.h.10.mlp.c_fc.bias]

Loading weights: 88%|████████▊ | 130/148 [00:00<00:00, 1534.49it/s, Materializing param=transformer.h.10.mlp.c_fc.weight]

Loading weights: 88%|████████▊ | 130/148 [00:00<00:00, 1531.49it/s, Materializing param=transformer.h.10.mlp.c_fc.weight]

Loading weights: 89%|████████▊ | 131/148 [00:00<00:00, 1539.16it/s, Materializing param=transformer.h.10.mlp.c_proj.bias]

Loading weights: 89%|████████▊ | 131/148 [00:00<00:00, 1536.16it/s, Materializing param=transformer.h.10.mlp.c_proj.bias]

Loading weights: 89%|████████▉ | 132/148 [00:00<00:00, 1543.80it/s, Materializing param=transformer.h.10.mlp.c_proj.weight]

Loading weights: 89%|████████▉ | 132/148 [00:00<00:00, 1540.75it/s, Materializing param=transformer.h.10.mlp.c_proj.weight]

Loading weights: 90%|████████▉ | 133/148 [00:00<00:00, 1548.25it/s, Materializing param=transformer.h.11.attn.c_attn.bias]