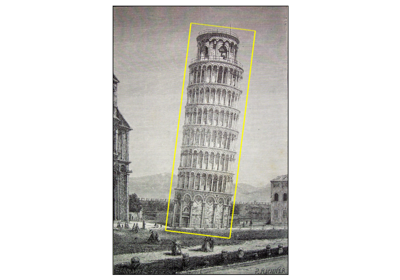

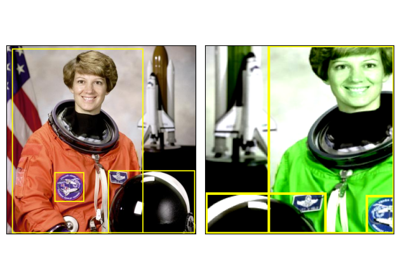

BoundingBoxes

- class torchvision.tv_tensors.BoundingBoxes(data: Any, *, format: torchvision.tv_tensors._bounding_boxes.BoundingBoxFormat | str, canvas_size: tuple[int, int], clamping_mode: Optional[str] = 'soft', dtype: Optional[dtype] = None, device: Optional[Union[device, str, int]] = None, requires_grad: Optional[bool] = None)

torch.Tensor subclass for bounding boxes with shape [N, K].

Note

Support for rotated bounding boxes was released in TorchVision 0.23 and is currently a BETA feature. We don’t expect the API to change, but there may be some rare edge-cases. If you find any issues, please report them on our bug tracker: https://github.com/pytorch/vision/issues?q=is:open+is:issue

In the shape [N, K], N is the number of bounding boxes, and K is 4 for unrotated boxes and 5 or 8 for rotated boxes.

Note

There should be only one BoundingBoxes instance per sample, e.g. {"img": img, "bbox": BoundingBoxes(...)}, although one BoundingBoxes object can contain multiple bounding boxes.

- Parameters:

  - data – Any data that can be turned into a tensor with torch.as_tensor().
  - format (BoundingBoxFormat, str) – Format of the bounding box.
  - canvas_size (two-tuple of ints) – Height and width of the corresponding image or video.
  - clamping_mode – The clamping mode to use when applying transforms that may result in bounding boxes partially outside of the image. Possible values are: “soft”, “hard”, or None. Read more in Clamping Mode, and its effect on transforms.
  - dtype (torch.dtype, optional) – Desired data type of the bounding box. If omitted, it is inferred from data.
  - device (torch.device, optional) – Desired device of the bounding box. If omitted and data is a torch.Tensor, the device is taken from it. Otherwise, the bounding box is constructed on the CPU.
  - requires_grad (bool, optional) – Whether autograd should record operations on the bounding box. If omitted and data is a torch.Tensor, the value is taken from it. Otherwise, defaults to False.

Examples using BoundingBoxes: