RandomZoomOut¶

- class torchvision.transforms.v2.RandomZoomOut(fill: Union[int, float, Sequence[int], Sequence[float], None, Dict[Union[Type, str], Optional[Union[int, float, Sequence[int], Sequence[float]]]]] = 0, side_range: Sequence[float] = (1.0, 4.0), p: float = 0.5)[source]¶

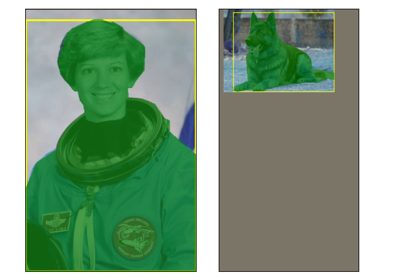

[BETA] “Zoom out” transformation from “SSD: Single Shot MultiBox Detector”.

Note

The RandomZoomOut transform is in Beta stage, and while we do not expect disruptive breaking changes, some APIs may slightly change according to user feedback. Please submit any feedback you may have in this issue: https://github.com/pytorch/vision/issues/6753.

This transformation randomly pads images, videos, bounding boxes and masks creating a zoom out effect. Output spatial size is randomly sampled from original size up to a maximum size configured with

side_rangeparameter:r = uniform_sample(side_range[0], side_range[1]) output_width = input_width * r output_height = input_height * r

If the input is a

torch.Tensoror aTVTensor(e.g.Image,Video,BoundingBoxesetc.) it can have arbitrary number of leading batch dimensions. For example, the image can have[..., C, H, W]shape. A bounding box can have[..., 4]shape.- Parameters:

fill (number or tuple or dict, optional) – Pixel fill value used when the

padding_modeis constant. Default is 0. If a tuple of length 3, it is used to fill R, G, B channels respectively. Fill value can be also a dictionary mapping data type to the fill value, e.g.fill={tv_tensors.Image: 127, tv_tensors.Mask: 0}whereImagewill be filled with 127 andMaskwill be filled with 0.side_range (sequence of python:floats, optional) – tuple of two floats defines minimum and maximum factors to scale the input size.

p (float, optional) – probability that the zoom operation will be performed.

Examples using

RandomZoomOut:

Transforms v2: End-to-end object detection/segmentation example

Transforms v2: End-to-end object detection/segmentation example